“Fraudulently made robocalls have the potential to devastate voter turnout by flooding thousands of voters with intimidating, threatening, or coercive messages in a matter of hours.”

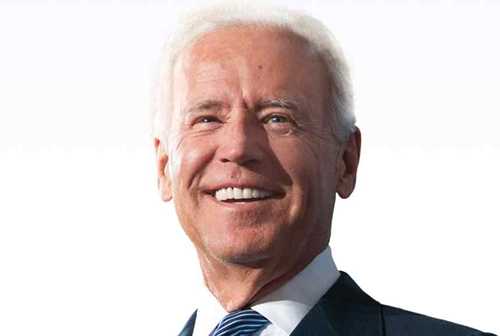

New Hampshire residents and voting rights groups on Thursday launched a federal lawsuit against a Democratic consultant and two companies behind January robocalls featuring audio that mimicked Democratic U.S. President Joe Biden’s voice using artificial intelligence to discourage recipients from participating in the state’s primary election.

“These types of voter suppression tactics have no place in our democracy,” declared Celina Stewart, chief counsel at the League of Women Voters (LWV) of the United States. “Voters deserve to make their voices heard freely and without intimidation.”

“For over 100 years, the League of Women Voters has worked to protect voters from these unlawful crimes and will continue to fight back against bad-faith actors who aim to disrupt our democratic system,” added Stewart, whose group is part of the case.

The complaint—filed by the nonprofit Free Speech for People (FSFP) and a pair of law firms on behalf of three voters as well as the state and national arms of the LWV—accuses consultant Steve Kramer, Life Corporation, and Lingo Telecom of violating New Hampshire election laws along with the federal Telephone Consumer Protection Act and Voting Rights Act with the robocalls.

“These deceptive robocalls attempted to cause widespread confusion among New Hampshire voters,” noted Liz Tentarelli, president of the state’s LWV. “As a nonpartisan organization, the League of Women Voters works to ensure that all voters, regardless of their party affiliation, have the most accurate election information to make their voices heard. We will continue to advocate for New Hampshire voters and fight against malicious schemes to suppress the vote.”

NBC reports that NH voters are getting robocalls with a deepfake of Biden’s voice telling them to not vote tomorrow.

“it’s important that you save your vote for the November election.” https://t.co/LAOKRtDanK pic.twitter.com/wzm0PcaN6H

— Alex Thompson (@AlexThomp) January 22, 2024

Looking toward a November election in which Biden is expected to face Republican former President Donald Trump, voting rights advocates and artificial intelligence experts are sounding the alarm about the potential impact of AI, especially deepfakes—audio or video that convincingly appears to show someone doing or saying something they never did.

“Fraudulently made robocalls have the potential to devastate voter turnout by flooding thousands of voters with intimidating, threatening, or coercive messages in a matter of hours,” warned FSFP senior counsel Courtney Hostetler. “No one should abuse technology to make lawful voters think that they should not, or cannot safely, vote in the primaries or in any election. It is an honor to represent the League of Women Voters and the other plaintiffs in this important case to protect the right to vote.”

The complaint asks the U.S. District Court for the District of New Hampshire for a permanent, nationwide injunction to prevent Kramer and both companies “from producing, generating, or distributing AI-generated robocalls impersonating any person, without that person’s express, prior written consent,” as well as monetary and punitive damages.

The Associated Press reported that “a spokesperson for Kramer declined to comment on the lawsuit, saying his attorneys had not yet received it. Lingo Telecom and Life Corporation did not immediately respond to messages requesting comment.”

After the New Hampshire robocalls started getting national media coverage, the state Attorney General’s Office and Federal Communications Commission began investigating, which resulted in cease-and-desist orders. Last month, the FCC also announced a rule declaring that such calls are illegal under the Telephone Consumer Protection Act.

While welcoming the move, Robert Weissman, president of the consumer advocacy group Public Citizen, noted that “the act’s prohibition on use of ‘an artificial or prerecorded voice’ generally does not apply to noncommercial calls and nonprofits. So the FCC’s new rule will not cure the problem of AI voice-generated calls related to elections.”

Public Citizen and other critics of influencing elections with artificial intelligence have demanded action from Congress and the Federal Election Commission, whose chair, Sean Cooksey, said in January the FEC “will resolve the AI rulemaking by early summer.”

Common Dreams’ work is licensed under a Creative Commons Attribution-Share Alike 3.0 License. Feel free to republish and share widely.
